Vision Improves with 3-D

Digital 3-D cameras are extending the potential of existing 3-D imaging technology for robotic guidance and material handling systems. Equipped with integrated time of flight (ToF) sensors, these cameras deliver rapid spatial capture of objects and components. Three-dimensional cameras quickly provide data on target dimensions and position with a single snapshot that captures and displays both a distance and a gray-scale image.

These cameras are used on robotic arms, in robotic pick-and-place systems, on automated material handling lines and in logistics systems where machine vision is essential. The cameras can be used for volume and area identification, part location and positioning, presence and completion checks, palletizing, luggage and package sorting, and collision detection. Industries potentially using these 3-D cameras include automotive assembly, warehousing, packaging and security surveillance.

Capturing 3-D images in automated industrial systems is particularly important when the dimensions or the exact location of objects are unknown. For example, in robotic applications, parts are picked up from inexact locations, and then must be accurately placed in a target location such as a pallet or on floor space in a warehouse. In logistics systems where thousands of packages are classified or airports where cases and bags are sorted and controlled, spatial images are critical to the success of the system.

A 3-D camera can measure the distance for each pixel in parallel, as well as provide a distance and an intensity or gray scale image simultaneously. Here, 3-D data is evaluated to determine the volume and coordinates. Source: Baumer

Existing 3-D Imaging Techniques

A number of different techniques have historically been used to capture 3-D images. All use different equipment and require various amounts of time to generate a 3-D image that can be analyzed or visualized by a standard PC system.

In 1840, Sir Charles Wheatstone developed stereoscopy, one of the oldest 3-D imaging techniques. Present-day industrial machine vision systems still use stereoscopy, which imitates the function of human vision, to capture 3-D images of objects. Stereoscopy involves setting two 2-D cameras slightly apart from each other, typically 50 centimeters to 1 meter, depending on the size of the target.
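As a rough illustration of how two offset cameras yield depth, the sketch below uses the textbook relation for an idealized, rectified stereo pair: depth equals focal length times baseline divided by disparity. The function name, the focal length expressed in pixels, and the example numbers are assumptions chosen for illustration; they do not come from any particular camera system.

```python
def stereo_depth(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by two parallel, rectified cameras (idealized pinhole model).

    focal_length_px: focal length expressed in pixels (assumed known from calibration)
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the same point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers: cameras 0.5 m apart, 1000 px focal length, 250 px disparity.
depth_m = stereo_depth(focal_length_px=1000.0, baseline_m=0.5, disparity_px=250.0)
print(f"Estimated depth: {depth_m} m")
```

The relation also shows why the camera spacing depends on the size and distance of the target: a wider baseline produces larger disparities, and therefore finer depth resolution, for far-away objects.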

Real-time applications where the camera acts like a human eye, such as this top-view conveyor belt inspection, benefit most from 3-D camera technology. Source: Baumer

In structured light projection, a pattern of stripes or arbitrary fringes is projected onto the surface of a target object, and the object’s surface shape causes the light pattern to appear distorted. In order to accurately determine the shape of the object, three to four different patterns must be projected onto the target, a scanning process that can be time consuming. As the patterns are projected, 2-D cameras take images of the light distortion on the object’s surface and send the images back to a PC. Comprehensive software is used to compute the 3-D image based on the displacement of the light patterns.

Structured light projection requires the use of a stripe projector and at least one, but most often two, 2-D cameras positioned effectively around an object. The required equipment takes up a substantial amount of floor space and system integration is complicated. As the scanning process is lengthy, the production or sorting function is prolonged.

In light sectioning, an alternative 3-D imaging technique, laser planes are projected onto an object’s surface. For the scanning process to be completed, either the target object moves through the laser or the laser moves over the object. During the scanning process, a digital camera takes images that show the contour of the target object in that position. After capturing enough lines along the surface, the whole object structure is available for processing.

Software evaluates the observed lateral displacement of the laser line at all points along the object and calculates the dimensions of the object. Since acquiring all the necessary 3-D images may take several seconds, light sectioning may be too slow a process for time-critical applications.
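The displacement-to-dimension step can be sketched with simple triangulation. The example assumes one common geometry that the article does not specify: the laser plane is projected straight down onto the object, and the camera views the line at a known angle from the laser axis, so a surface raised by height h shifts the line sideways by h times the tangent of that angle. The function names, the 45-degree angle, and the displacement values are hypothetical.

```python
import math


def height_from_displacement(displacement_mm: float, camera_angle_deg: float) -> float:
    """Surface height from the lateral shift of the laser line.

    Assumes the laser points straight down and the camera looks at the line
    at camera_angle_deg from the laser axis, with the displacement already
    converted from pixels to millimeters in the reference plane.
    """
    if not 0.0 < camera_angle_deg < 90.0:
        raise ValueError("camera angle must be between 0 and 90 degrees")
    return displacement_mm / math.tan(math.radians(camera_angle_deg))


def profile_heights(displacements_mm, camera_angle_deg):
    """Convert one captured laser line (a list of displacements) into a height profile."""
    return [height_from_displacement(d, camera_angle_deg) for d in displacements_mm]


# Hypothetical laser line crossing a flat belt (0 mm) and a 20 mm tall part.
line = [0.0, 0.0, 20.0, 20.0, 20.0, 0.0]
print(profile_heights(line, camera_angle_deg=45.0))
```

One height profile is produced per captured image, which is why the object or the laser has to move, and why many images are needed before the whole surface is known.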

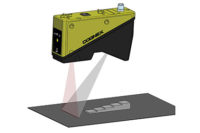

Based on time of flight measurement, as demonstrated here, 3-D cameras are equipped with special lock-in image sensors that read dimensions based on reflected light. Source: Baumer

Digital 3-D Camera Technology

New 3-D cameras are revolutionizing spatial detection of objects and components by rapidly providing information on an object’s dimensions and location using a single image. These cameras have no moving parts and determine the dimensions of a target without requiring a scanning process.

Based on ToF measurement, 3-D cameras are equipped with special lock-in image sensors that read dimensions based on reflected light. Light is projected from the camera’s integrated LED light source located around the lens. Using active illumination, the camera emits light whose intensity is modulated sinusoidally at a known frequency. The emitted light is synchronized with the image sensor and is reflected at the surface of the target object. The sensor measures distance by determining the time required for light to travel from the camera to the object and back, which appears as a phase shift between the emitted and the reflected signal. To recover that shift, the reflected signal is sampled four times in a specified time period, with each sample phase-shifted by 90 degrees from the previous one.
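The four-sample measurement can be illustrated for a single pixel with the widely used four-phase formulation for sinusoidally modulated ToF. This is a minimal sketch rather than the camera's actual firmware: the 20 MHz modulation frequency, the sample model in `simulate_samples`, and all function names are assumptions made for the example.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def simulate_samples(distance_m, mod_freq_hz, amplitude=1.0, offset=2.0):
    """Idealized pixel readings at 0, 90, 180 and 270 degrees (noise-free model)."""
    # Round trip of 2 * distance delays the signal by this phase angle.
    phase = 4.0 * math.pi * mod_freq_hz * distance_m / C
    return [offset + amplitude * math.cos(phase + k * math.pi / 2.0) for k in range(4)]


def tof_pixel(samples, mod_freq_hz):
    """Recover distance, signal amplitude and gray-scale intensity from four samples."""
    a0, a1, a2, a3 = samples
    # Differences of opposite samples cancel the constant background offset.
    phase = math.atan2(a3 - a1, a0 - a2) % (2.0 * math.pi)
    distance_m = C * phase / (4.0 * math.pi * mod_freq_hz)
    amplitude = 0.5 * math.hypot(a3 - a1, a0 - a2)
    intensity = sum(samples) / 4.0  # mean of the samples gives the gray-scale value
    return distance_m, amplitude, intensity


# Hypothetical pixel looking at a surface 3 m away, 20 MHz modulation.
freq = 20e6
distance, amplitude, intensity = tof_pixel(simulate_samples(3.0, freq), freq)
print(f"distance={distance:.3f} m, amplitude={amplitude:.3f}, intensity={intensity:.3f}")
```

Because the same four samples yield both the phase and the mean brightness, each pixel delivers a distance value and a gray-scale value from one exposure sequence. Under this model the phase wraps after one modulation period, so distances are unambiguous only up to c / (2 f), roughly 7.5 m at the assumed 20 MHz.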

Since 3-D cameras can measure the distance for each pixel in parallel, they can provide a distance image and an intensity, or gray-scale, image simultaneously at up to 54 full frames per second, depending on the ToF sensor technology specified for the camera.

Real-time applications where the camera acts like a human eye benefit most from 3-D camera technology. In a range of applications, digital 3-D cameras are extending the potential of existing 3-D imaging technology. While various techniques have been used to capture 3-D images, the emergence of 3-D cameras can be a benefit to the vision systems in industries from automotive to security. V&S

Intensity and color-coded distance images are shown for a de-palletizing process. Source: Baumer

