Computed Tomography: Optimizing Large Quantity Automated Measurements

Learn more about automated measurement techniques for large quantity screening applications.

This is a 2D cross section of ruby spheres with spheres fitted to scan data.

Nondestructive evaluation of large quantity production components is becoming more achievable with CT technology thanks to advances in machine hardware and processing techniques over the past few years. Computed tomography, once a slow, tedious process, has become a far faster method of data acquisition. The cone beam CT approach, paired with ever-increasing data communication rates, allows substantial (and reliable) data sets to be produced much faster; in some cases a scan takes 15 minutes or less.

This article uses a calibrated test artifact to demonstrate how scan time affects the ability to perform automated measurement techniques for large quantity screening applications. An automated measurement template is applied five times to each of a series of scans ranging from five minutes to three hours. The variation among the five measurement outputs is then used to decide the optimal scan time for this object. This type of benchmarking can be used to evaluate the optimal scan time for any component that may require a large inspection quantity. The overall goal of investing time in the setup is to reduce the unnecessary time and cost associated with each subsequent scan while still maintaining quality and precision.

Ruby Sphere Assembly Test Setup

A calibrated ruby sphere assembly was used for this benchmark study. Five scans were performed at the same magnification: a five minute scan, a 15 minute scan, a 30 minute scan, a one hour scan and a three hour scan. The software was used to define and fit spheres onto the scanned assembly, and the resulting measurements were saved as a template in the established coordinate system. This measurement template can be thought of as virtual CMM probing that can be imported and applied to any registered data set. The distance between spheres was the reported measurement that was studied.
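To make the sphere-fit and distance step concrete, here is a minimal sketch in Python. The article does not say which fitting algorithm the CT software uses, so this assumes a generic algebraic least-squares sphere fit. The surface points are synthetic, and the function names, the 2 mm radius and the 10 mm spacing are hypothetical values chosen only for illustration.

```python
import numpy as np


def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius).

    Uses the identity |p|^2 = 2 c.p + (r^2 - |c|^2), which is linear
    in the unknown center c and the constant k = r^2 - |c|^2.
    """
    pts = np.asarray(points, dtype=float)
    A = np.hstack([2.0 * pts, np.ones((len(pts), 1))])
    b = np.sum(pts ** 2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center = sol[:3]
    radius = float(np.sqrt(sol[3] + center @ center))
    return center, radius


def synthetic_sphere(center, radius, n=500, noise=0.0, seed=0):
    """Hypothetical stand-in for surface points extracted from a scan."""
    rng = np.random.default_rng(seed)
    directions = rng.normal(size=(n, 3))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    radii = radius + noise * rng.normal(size=(n, 1))
    return np.asarray(center, dtype=float) + radii * directions


# Two made-up spheres, 10 mm apart, with 2 micron radial noise.
pts_a = synthetic_sphere([0.0, 0.0, 0.0], 2.0, noise=0.002, seed=1)
pts_b = synthetic_sphere([10.0, 0.0, 0.0], 2.0, noise=0.002, seed=2)

c_a, r_a = fit_sphere(pts_a)
c_b, r_b = fit_sphere(pts_b)
distance_mm = float(np.linalg.norm(c_b - c_a))
print(f"center-to-center distance: {distance_mm:.4f} mm")
```

The distance here is taken between fitted centers, which is an assumption about how the reported measurement is defined. An algebraic fit is used because it reduces to one linear solve; a geometric (orthogonal distance) fit would be a reasonable alternative.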

For each of the scans, the data set was imported into the software and aligned to the specified coordinate system. The measurement template was then applied, and the software searched within the user-specified search distance to automatically re-fit all of the defined features. This process was repeated for a total of five iterations per scan. The standard deviation of the five measurements was calculated and used as the metric for determining which scan time was optimal for this component.
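The repeatability metric described above can be sketched in a few lines of Python. The distances below are invented for illustration and are not the study's results. The article also does not say whether a sample or population standard deviation was used, so the sample form (n - 1 in the denominator) is an assumption.

```python
from statistics import stdev

# HYPOTHETICAL sphere-to-sphere distances in mm: five template
# applications per scan time (minutes). Not data from the study.
repeats_mm = {
    5:   [10.012, 9.991, 10.007, 9.986, 10.010],
    15:  [10.004, 9.997, 10.006, 9.995, 10.002],
    30:  [10.002, 10.000, 10.003, 9.999, 10.001],
    60:  [10.001, 10.000, 10.002, 9.999, 10.001],
    180: [10.001, 10.000, 10.001, 9.999, 10.001],
}


def repeatability_mm(values):
    """Sample standard deviation of repeated measurements (assumed metric)."""
    return stdev(values)


spread_mm = {minutes: repeatability_mm(v) for minutes, v in repeats_mm.items()}

for minutes, s in spread_mm.items():
    # Report in microns for readability.
    print(f"{minutes:>3} min scan: std = {s * 1000:.2f} um")
```

A real study would typically track one such value per measured feature rather than a single distance.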

Discussion of the Results

Looking at the standard deviation values for the five iterations under each scan time, it becomes clear that scan time has a diminishing return. For this benchmark test, the standard deviation did not improve significantly once the scan time reached 30 minutes. This benchmark test stepped through varying numbers of projections at a 1,000 millisecond exposure in order to create the range of scan times.

It is important to note that we are not studying the error in relation to a nominal value. The measurement error of a CT scanner changes with magnification and depends on many factors inside the machine, such as scan energy, focal distances and manipulator precision. Standard deviation is used as the metric instead of error percentage because the error should not change much over the range of scan times. Since the magnification was kept constant, the voxel size was consistent across all of the scans. It is assumed that the error can be corrected; this benchmark test evaluates the precision of the measurement template for specific scan times and not necessarily the accuracy. A different set of tests can be used to evaluate and correct for the error percentage.
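One way to turn the diminishing-return observation into a repeatable rule is sketched below. The standard deviations are hypothetical, and the definition of a "significant" improvement (here, at least 1 micron of remaining gain from scanning longer) is an assumption that each application would have to set for itself.

```python
# HYPOTHETICAL standard deviations in microns, keyed by scan time in minutes.
spread_um = {5: 11.9, 15: 4.7, 30: 1.6, 60: 1.1, 180: 0.9}


def shortest_stable_scan(spread_by_minutes, significant_gain_um=1.0):
    """Shortest scan time that no longer scan beats by a significant margin.

    For each scan time, the remaining gain is its standard deviation minus
    the best standard deviation among all longer scans. The first scan time
    whose remaining gain is below the threshold is returned.
    """
    if not spread_by_minutes:
        raise ValueError("no scan times supplied")
    times = sorted(spread_by_minutes)
    for i, minutes in enumerate(times):
        longer = [spread_by_minutes[t] for t in times[i + 1:]]
        if not longer:
            return minutes  # longest scan: nothing left to compare against
        remaining_gain = spread_by_minutes[minutes] - min(longer)
        if remaining_gain < significant_gain_um:
            return minutes


chosen_minutes = shortest_stable_scan(spread_um)
print(f"shortest scan with no significant gain left: {chosen_minutes} min")
```

With these made-up numbers the rule selects the 30 minute scan; a different threshold or a different part could move that point in either direction.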

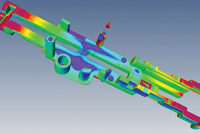

Here is a measurement template created from sphere fit points.

Now that the benchmark testing has been completed, we have a better understanding of the ideal scan time. If repeatability of the measurement template is the main concern, there is no incentive to scan for longer than 30 minutes. Assuming this were a production part made by the hundreds, the NDT engineer now knows where to pinpoint the setup to optimize both time and results. These results imply that any scan longer than 30 minutes will give nearly equivalent precision, so the optimization process can begin by reducing scans to the 30 minute range. Keep in mind that this is just one tool for beginning the optimization. Many other factors influence scan time, but this is a useful place to start.

Industry Applications

For the sake of simplicity and to prove a point, the ruby sphere assembly was chosen. In practice, a need for automated measurements would occur on production components such as injection molded plastic assemblies, additive manufacturing assemblies, castings, PCB components, etc. The overall goal is to sacrifice setup time up front in order to improve the process down the line. The size, material density and measurement template complexity all play a part in where the standard deviations start converging. We expected the ruby spheres to converge quickly because they are small and require few projections to acquire. However, a 30 minute scan is not the universal answer; it just happened to be where the results converged for the spheres. Nor does this rule out even quicker scans. If the tolerances allow, a higher standard deviation might be acceptable for the application.
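The tolerance trade-off can be sketched as a simple budget check. The article does not prescribe an acceptance rule, so this assumes one common gauge rule of thumb: six standard deviations should consume no more than 10% of the total tolerance band. The standard deviations, tolerance bands and function name are all hypothetical.

```python
# HYPOTHETICAL standard deviations in microns, keyed by scan time in minutes.
spread_um = {5: 11.9, 15: 4.7, 30: 1.6, 60: 1.1, 180: 0.9}


def shortest_scan_within_budget(spread_by_minutes, tolerance_band_um,
                                coverage=6.0, max_ratio=0.10):
    """Shortest scan whose spread fits the assumed precision budget.

    Accepts a scan time when coverage * std <= max_ratio * tolerance band.
    Returns None when no benchmarked scan time is precise enough.
    """
    budget_um = max_ratio * tolerance_band_um
    for minutes in sorted(spread_by_minutes):
        if coverage * spread_by_minutes[minutes] <= budget_um:
            return minutes
    return None


# A loose +/-0.2 mm feature (400 um band) versus a tighter 100 um band.
loose = shortest_scan_within_budget(spread_um, tolerance_band_um=400.0)
tight = shortest_scan_within_budget(spread_um, tolerance_band_um=100.0)
print(f"400 um band: {loose} min, 100 um band: {tight} min")
```

Under these assumptions the looser tolerance is satisfied by a scan shorter than 30 minutes, while the tighter one is not. This covers precision only; accuracy would still need to be verified separately.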

Using computed tomography automated measurement techniques for a 100% inspection rate is beginning to gain traction as an efficient and reliable inspection method. It goes beyond typical CMM touch probe inspections, allowing the same type of measurements to be performed on hidden and complex surfaces. Using scan time benchmarks to optimize for the required measurement precision is just one facet of the overall inspection process, but it is an important one to evaluate. A few hours of setup invested at the beginning can pay dividends when scanning and inspecting hundreds of units or more. This benchmark scan method should be in the arsenal of all NDT engineers and technicians looking to create or improve measurement and dimensioning techniques using computed tomography.
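The payoff of the up-front setup can be estimated with simple arithmetic. In the sketch below, the 60 minute baseline scan, the four hours of benchmarking and the 300 unit batch are hypothetical; only the 30 minute optimized scan comes from the benchmark above.

```python
import math


def break_even_units(baseline_scan_min, optimized_scan_min, setup_min):
    """Number of units after which the setup time has paid for itself."""
    saved_per_unit = baseline_scan_min - optimized_scan_min
    if saved_per_unit <= 0:
        raise ValueError("optimized scan must be shorter than the baseline")
    return math.ceil(setup_min / saved_per_unit)


def net_minutes_saved(units, baseline_scan_min, optimized_scan_min, setup_min):
    """Total scan time saved over a batch, minus the one-time setup cost."""
    return units * (baseline_scan_min - optimized_scan_min) - setup_min


# HYPOTHETICAL scenario: 60 min conservative baseline, 4 h of benchmarking.
units_to_recover = break_even_units(60, 30, setup_min=240)
batch_saving_min = net_minutes_saved(300, 60, 30, setup_min=240)

print(f"setup recovered after {units_to_recover} units")
print(f"net saving on 300 units: {batch_saving_min / 60:.0f} hours")
```

This counts scan time only. Part handling, reconstruction and analysis time are ignored, so it should be read as a rough estimate.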
