Missing the Point: Gage Variability and Operational Definitions

Clear operational definitions can prevent chaos.

Analyzing gage variability is often a highly structured process. It begins with the gages themselves, which are examined for sensitivity to temperature changes, magnetic fields, and other factors. These are the easy sources to find. A second source of variability is the gage operators, who may differ in training, experience, fatigue, and even attitude.

Collecting data offers clues to sources of variability. But when this disciplined analysis fails to uncover real reasons for variability, it may be time for the Sherlock Holmes of variability to look at operational definitions—often the most overlooked consideration when evaluating variation among measurement devices.

Elementary, my dear Watson? Perhaps, but vague or nonexistent operational definitions can nonetheless introduce variation in gage output. “In the opinion of many people in industry, there is nothing more important for transaction of business than use of operational definitions. It could also be said that no requirement of industry is so much neglected” (Deming, 276). In defining any process, vague language can be the death knell for accuracy.

Just ‘close enough’? For accuracy in measurement results, operational definitions are critical.

Operational Definitions

Because we are used to somewhat loose definitions of tasks, with the expectation that “everyone knows how to do this,” it’s easy to forget the importance of clearly defined instructions for collecting data. It’s one thing to say “Put the groceries away,” and another to specify, perhaps for a child, “Put cold things in the refrigerator, frozen food in the freezer, and canned goods in the pantry.” Because everyday tasks such as this do not demand precise measurement, one can afford to be casual about the instructions and tailor them to the person carrying them out. In manufacturing and service environments, however, a lack of clear and specific operational definitions can create chaos, rendering the data meaningless and the outcomes unclear.

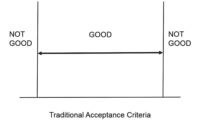

If inspectors are asked to identify defective devices, each will have his or her own sense of “defective.” If they have a clear understanding of a specific characteristic of interest (inaccurate measurement, for example) as well as the method for measuring it and the decision criteria that are to be considered, they are more likely to arrive at the same conclusions about what constitutes “defective.” Is it defective if the label is slightly loose? Or does it have to be blatantly miscalculating measurements in order to be defined as defective?

Sometimes an operational definition may appear to be appropriately focused and clear:

“When measuring the part, hold the gage firmly and tighten the thimble firmly. Measure to 1/16th inch.”

Sounds good, right? But do you know how tight the thimble really should be? Or whether to round up if the measurement is close to 1/16”?

How about this approach to an operational definition:

Setup: Start the gage lab with all eight lights on. The temperature in the lab must be between 73 and 75 degrees Fahrenheit, with 20-30% humidity. All parts to be measured must be in the lab for a minimum of 30 minutes prior to any measurements being taken, to assure uniform temperature.

When measuring tubes up to 3”, hold the 0–3” micrometer at a right angle to the tube or use the gage fixture. The anvil and spindle must be perpendicular to the tube.

Tighten the thimble until the slipping clutch clicks.

Measure to the nearest 16th of an inch. Round up if the measurement falls between scale marks.

This detailed operational definition helps to assure that all operators approach the task in the same way, reducing the levels of variation among them.
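One way to see how specific this definition is: it can be written down as checks that leave no room for interpretation. The following is a minimal sketch, not part of any published procedure. The function and constant names are hypothetical, and it assumes that “round up” applies to any reading that falls between 1/16” marks.

```python
import math
from fractions import Fraction

# Limits copied from the definition above; the names are illustrative only.
TEMP_RANGE_F = (73, 75)
HUMIDITY_RANGE_PCT = (20, 30)
MIN_SOAK_MINUTES = 30
REQUIRED_LIGHTS_ON = 8
RESOLUTION_IN = Fraction(1, 16)


def lab_ready(temp_f, humidity_pct, soak_minutes, lights_on):
    """Return True only if every setup condition in the definition is met."""
    return (
        TEMP_RANGE_F[0] <= temp_f <= TEMP_RANGE_F[1]
        and HUMIDITY_RANGE_PCT[0] <= humidity_pct <= HUMIDITY_RANGE_PCT[1]
        and soak_minutes >= MIN_SOAK_MINUTES
        and lights_on == REQUIRED_LIGHTS_ON
    )


def record_reading(raw_inches):
    """Round a raw reading up to the next 1/16 inch mark.

    Assumption: a reading that lands exactly on a mark is kept as is,
    and anything between marks is rounded up.
    """
    steps = math.ceil(Fraction(raw_inches) / RESOLUTION_IN)
    return steps * RESOLUTION_IN


if __name__ == "__main__":
    print(lab_ready(74, 25, 45, 8))   # all conditions inside the limits
    print(record_reading(1.03))       # falls between 1" and 1-1/16"
```

Two operators who both follow these rules will record the same value for the same raw reading, which is the point of the definition.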

In another example, the directive “Gages must be checked at regular intervals” invites chaos. What does the “checking” entail? It might be only glancing at the inventory to make sure gages are in the right place. And “regular intervals” could mean anything from every hour to once a year on my birthday. When operators are left to create their own definitions and understandings, the outcomes will not be reliable.

Establishing an operational definition for “checking” may include a description of the instrument that is used. (Naked eye? Camera? Historical record?) It may entail actually picking up the gage, or taking additional steps to weigh it or assess its accuracy. Without specific information, one might assume that “checking” means just verifying that the gage is in the inventory. (“I looked at it; everything seems okay” could fit some people’s definition of “checking.”) In the same way, “regular intervals” can be read in a variety of ways; a definition that states a specific interval is far easier to interpret and to follow consistently. Checking “every three or four days” would be far less predictable than checking “once a month, on the final work day of the month,” for example.
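A rule such as “once a month, on the final work day of the month” is specific enough to compute, which is a useful test of whether an interval is truly defined. Here is a minimal sketch, assuming that work days run Monday through Friday and ignoring plant holidays; the function name is hypothetical.

```python
import calendar
from datetime import date, timedelta


def last_work_day(year, month):
    """Return the last Monday-to-Friday date of the given month.

    Assumption: weekends are the only non-working days. Plant holidays
    would need a site-specific calendar.
    """
    last_day = calendar.monthrange(year, month)[1]  # number of days in month
    day = date(year, month, last_day)
    while day.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        day -= timedelta(days=1)
    return day


if __name__ == "__main__":
    for month in (2, 3, 6):
        print(last_work_day(2024, month))
```

No comparable function can be written for “every three or four days” without first deciding who chooses between three and four, and that is exactly the ambiguity the definition should remove.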

In manufacturing fuel gages, it may be important to check the position of the needle on the gage and to define where it should be when the gage is measuring “empty.” We’ve all had fuel gages on our cars whose needle sat above, or even below, the “E” at the moment the fuel tank actually became empty. We simply become used to the exact point, perhaps only after running out of gas a time or two. The manufacturer, on the other hand, needs to have assurance that a fuel gage is consistent and predictable in its announcement of “empty.” An operational definition might indicate that the needle must be touching or covering the “E,” for example, or that it will indicate an empty container only when the needle reaches a point below, and not touching, the “E.”

The Challenge

Establishing operational definitions and working procedures may represent only the first step to improvement. Once the quality team has invested the time to create, review, accept, and distribute these definitions, the challenge becomes ensuring that operators, technicians, and managers adhere to them, which is rarely easy.

One opportunity to evaluate whether team members are using the operational definitions is during measurement systems analysis studies. The administrator of a gage R&R study is responsible for more than just collecting the measurement data. In addition to conducting the study with the appraisers, the administrator can observe and note how operators take their measurements, which provides a firsthand view of the measurement procedures actually in use. A savvy administrator can note the differences between the approved operational definitions and procedures and what is actually being done on the shop floor. If the operational definitions are not being followed, improvement plans and retraining of the operators can be scheduled. The operators can also offer insight into why they have not been following the definitions. Perhaps the wording has caused confusion, or the definitions need to be rewritten to better match the measurement environment.
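The administrator’s observations can be paired with a quick look at the numbers. The sketch below is a rough screen, not a full gage R&R calculation: for repeat readings of a single part, it compares the spread between operator averages with the average spread within each operator’s own readings. The function names and the sample readings (in thousandths of an inch) are hypothetical.

```python
from statistics import mean


def operator_summary(readings):
    """Summarize repeat readings of one part, keyed by operator."""
    summary = {}
    for operator, values in readings.items():
        summary[operator] = {
            "mean": mean(values),
            "range": max(values) - min(values),
        }
    return summary


def screen(readings):
    """Return (spread between operator means, average within-operator range)."""
    summary = operator_summary(readings)
    means = [s["mean"] for s in summary.values()]
    between = max(means) - min(means)
    within = mean(s["range"] for s in summary.values())
    return between, within


if __name__ == "__main__":
    # Hypothetical data: three operators, three readings each, same part.
    readings = {
        "A": [500, 501, 500],
        "B": [504, 505, 504],
        "C": [500, 500, 501],
    }
    between, within = screen(readings)
    print(f"between operators: {between:.2f}, within operators: {within:.2f}")
```

When each operator repeats well but the operators disagree with one another, as operator B does here, the pattern is consistent with operators following different versions of the procedure, such as tightening the thimble differently. That is a prompt to go and watch, not a conclusion in itself.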

Not only are operational definitions essential to establishing a measurement system, but they also provide a diagnostic tool. When a system appears to be changing, the cause may be a change in the ways in which operational definitions are used. Without reliable operational definitions in place, data points may have wildly fluctuating patterns that do not reflect the reality of the system. Whenever a system is unstable, operational definitions and their use should be evaluated for their impact (Total Quality Tools, p. 171).

You may find that this evaluation brings with it the kind of “Eureka!” moment that would satisfy Sherlock himself.
